Thoughts on generative AI and responsibility

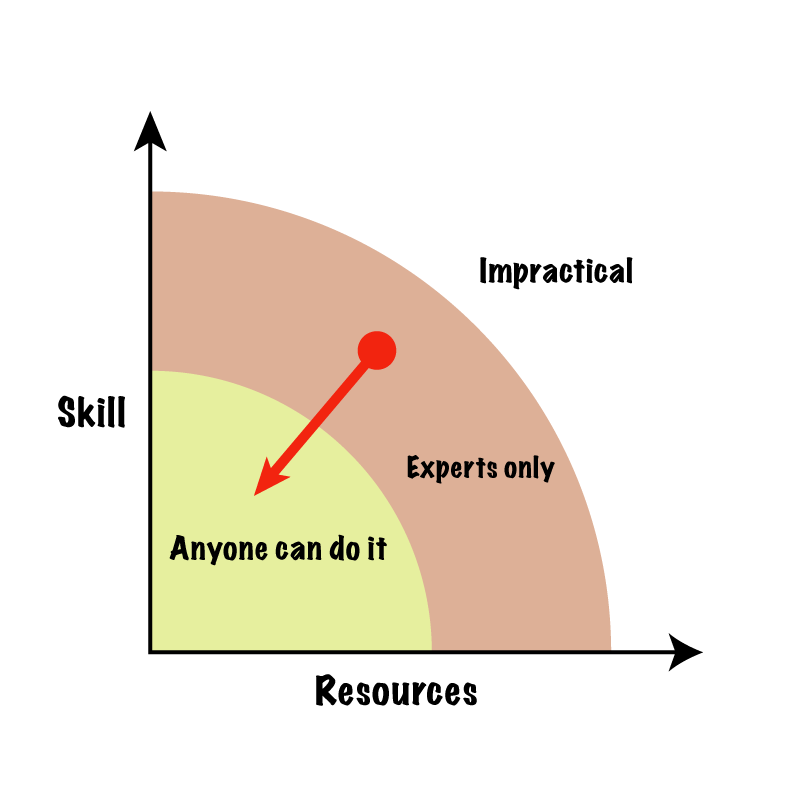

Here’s an illustration of a rudimentary mental model that I use when thinking about advances in computing tools.

Computers are already (essentially) Turing-complete, so anything that can be computed can, in theory, already be done with existing technology. What these leaps do is bring down the skill and resources needed to accomplish a given task. The red arrow in the image shows the effect of such a leap.

Generative AI is such a leap. The red dot represents not only genuinely useful things that people once had to learn a skill or pay someone to do and can now do with ease (removing a distracting object from a photograph), but also vectors of abuse that gain the same level of ease (crafting misinformation, generating fake revenge porn). With every leap, thousands of these red dots move toward the origin, some good for humanity and others bad.

The question that’s been on my mind is: how much should tool makers be held responsible for the abuses that are now made easier by the tools they make? After all, these tools are only doing the bidding of the users making the requests. Should knife makers be held responsible for a knife-assisted murder?

From a societal standpoint, I think it’s utterly unethical for anyone to release tools that can be used to harass vulnerable people or create mass disinformation that ends in tragedy. But any tool can be abused, so where do we draw that line, and how do we balance it against the good that also arises? Since harm can never be zero, how much harm is acceptable before we can still consider a tool good for humanity?

I think it is possible to predict some of the societal impact of new tools. I think it’s pretty safe to say that generative AI will be used for disinformation, spam creation, revenge porn, and other such abuses. For harms we can predict, I think it’s more than fair that society holds tool creators to some (legal) bar to ensure that those harms do not become widespread, while acknowledging that some harm will doubtless still occur.

Sure, novel abuses of the systems will be invented, because abusers can be quite creative. The real harms of advances in computing tools can sometimes only be discovered in retrospect. When those new harms are discovered, it’s important that we react with new regulations to contain them.

What I can’t accept is zero accountability up front, as some people appear to suggest. There is no case in my mind where anyone is justified in making available—and worse, profiting from—a new power tool that causes predictable, widespread harm.